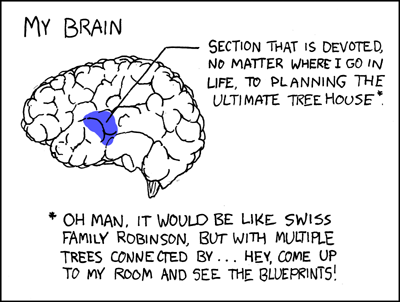

My Brain

From xkcd - A webcomic of romance, sarcasm, math, and language.

read more and comment..

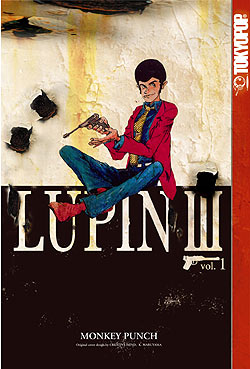

The Extraordinary Adventures of Arsène Lupin, Gentleman-Burglar

I recently finished listening to the LibriVox recording of The Extraordinary Adventures of Arsène Lupin, Gentleman-Burglar. LibriVox makes it available as a free podcast, but I notice the book is also available from Amazon.

This is Maurice Leblanc's classic: Lupin is the Sherlock Holmes/Hercule Poirot who took the other path in life!

I first encountered the character in the Japanese anime Lupin III by Kazuhiko Kato.

The fake David Blaine tapes

I can see the future. Whether you love or hate the real David Blaine, you are going to rofl when you watch these.

David Blaine Street Magic: YouTube Edition!

David Blaine Street Magic part 2 : youtube Edition!

Here we go ..

Prata. The king of foods. Best served hot and slightly crispy, with a masala curry and sweet teh tarik. It's cheap.. quick.. satisfying..

That's what this blog is going to be about. It's my place to post quick, satisfying stuff, no subject out of bounds.
