LEAP#018 The Fretboard - a multi-project build status monitor

(blogarhythm ~ Diablo - Don't Fret)

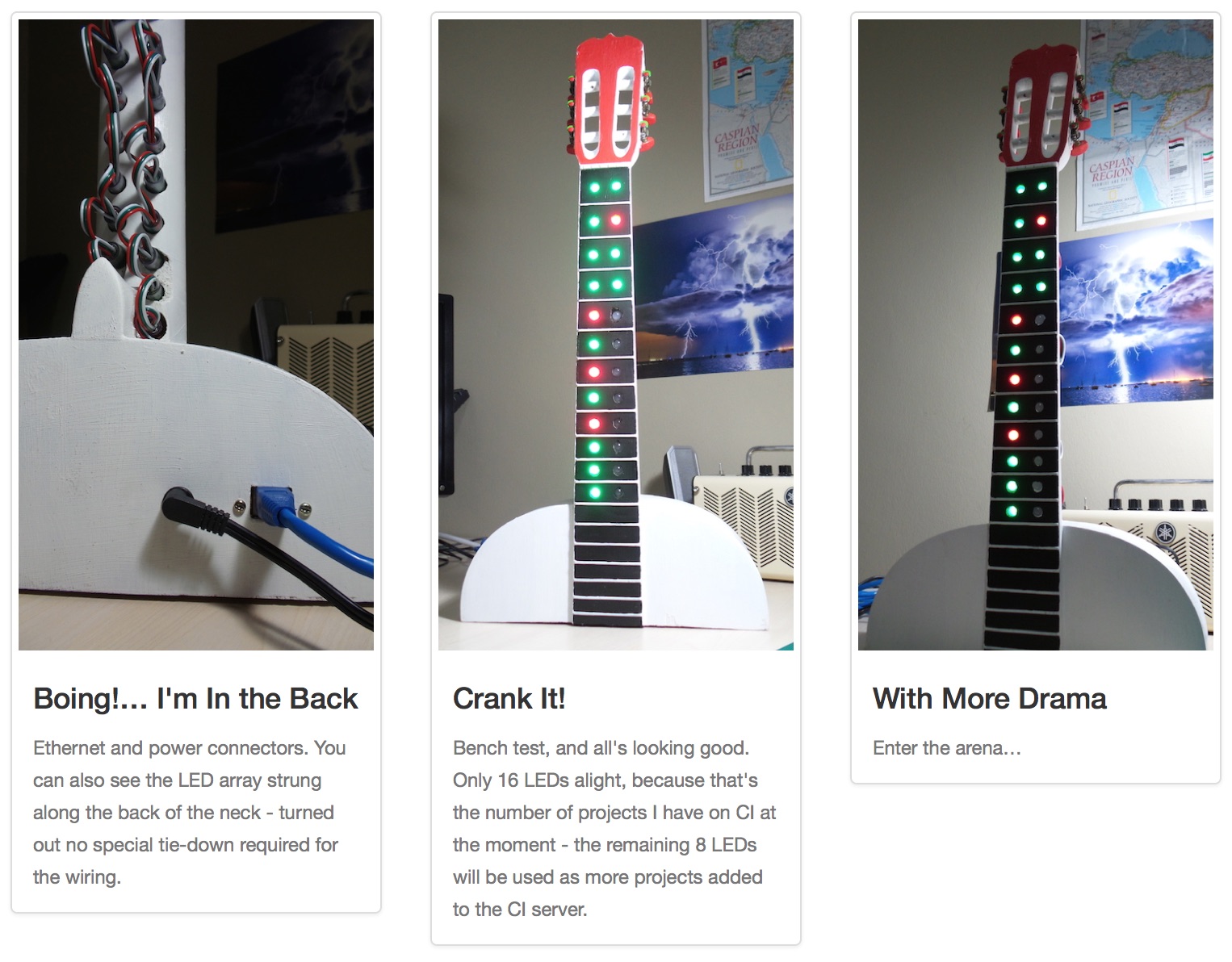

The Fretboard is a pretty simple Arduino project that visualizes the build status of up to 24 projects with an addressable LED array. The latest incarnation of the project is housed in an old classical guitar … hence the name ;-)
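
The post doesn't describe the firmware, so what follows is only a hypothetical sketch of the core mapping (in Python, not the project's Arduino code): one LED per project, coloured by that project's build status. The status names, the colour scheme and the `frame_for` helper are all assumptions for illustration.

```python
from enum import Enum

NUM_LEDS = 24  # one LED per project: "up to 24 projects"


class BuildStatus(Enum):
    # Hypothetical statuses; a real CI server reports its own vocabulary.
    PASSING = "passing"
    FAILING = "failing"
    BUILDING = "building"
    UNKNOWN = "unknown"


# Hypothetical colour scheme as (red, green, blue), 0-255 per channel.
STATUS_COLOURS = {
    BuildStatus.PASSING: (0, 255, 0),
    BuildStatus.FAILING: (255, 0, 0),
    BuildStatus.BUILDING: (255, 160, 0),
    BuildStatus.UNKNOWN: (0, 0, 32),
}

OFF = (0, 0, 0)


def frame_for(statuses: list[BuildStatus]) -> list[tuple[int, int, int]]:
    """Return one RGB tuple per LED; slots without a project stay dark."""
    if len(statuses) > NUM_LEDS:
        raise ValueError(f"at most {NUM_LEDS} projects fit on the board")
    frame = [STATUS_COLOURS[status] for status in statuses]
    frame.extend([OFF] * (NUM_LEDS - len(frame)))
    return frame


if __name__ == "__main__":
    frame = frame_for([BuildStatus.PASSING, BuildStatus.FAILING])
    print(frame[:3])  # [(0, 255, 0), (255, 0, 0), (0, 0, 0)]
```

A real build would then push each frame out to the LED strip; that hardware side is deliberately left out of this sketch.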

All the code and design details for The Fretboard are open-source and available at fretboard.tardate.com. Feel free to fork or borrow any ideas for your own build.

If you build anything similar, I'd love to hear about it.


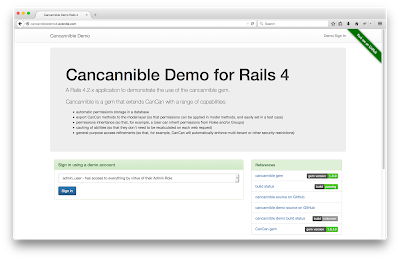

cancannible role-based access control gets an update for Rails 4

(blogarhythm ~ Can You Keep a Secret? / 宇多田ヒカル)

cancannible is a gem that has been kicking around in a few large-scale production deployments for years. It still gets loving attention: most recently, an official update for Rails 4 (thanks to the push from @zwippie).

There are now also demo sites, one for Rails 3.2.x and another for Rails 4.x, so that anyone can see it in action.

So what exactly does cancannible do? In a nutshell, it is a gem that extends CanCan with a range of capabilities:

- permissions inheritance (so that, for example, a User can inherit permissions from Roles and/or Groups)

- general-purpose access refinements (to automatically enforce multi-tenant or other security restrictions)

- automatic storage and loading of permissions from a database

- optional caching of abilities (so that they don't need to be recalculated on each web request)

- export of CanCan methods to the model layer (so that permissions can be applied in model methods and easily set in a test case)
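
cancannible itself is a Ruby gem and this post doesn't show its API, so the following is only a hypothetical Python sketch of the idea behind the first bullet, not the gem's code: a user's effective permissions are its own grants plus everything inherited from its roles and groups. The `Grantee` and `User` classes and the `(action, resource)` permission shape are illustrative assumptions.

```python
from dataclasses import dataclass, field

# Hypothetical model, not cancannible's schema: a permission is an
# (action, resource) pair such as ("read", "Article").
Permission = tuple[str, str]


@dataclass
class Grantee:
    """Anything that holds permissions directly (a role, a group, a user)."""
    name: str
    permissions: set[Permission] = field(default_factory=set)


@dataclass
class User(Grantee):
    """A user that also inherits permissions from its roles and groups."""
    roles: list[Grantee] = field(default_factory=list)
    groups: list[Grantee] = field(default_factory=list)

    def effective_permissions(self) -> set[Permission]:
        # Start from the user's own grants, then union in each source's grants.
        result = set(self.permissions)
        for source in self.roles + self.groups:
            result |= source.permissions
        return result

    def can(self, action: str, resource: str) -> bool:
        return (action, resource) in self.effective_permissions()


if __name__ == "__main__":
    editor = Grantee("editor", {("update", "Article")})
    staff = Grantee("staff", {("read", "Article")})
    alice = User("alice", roles=[editor], groups=[staff])
    print(alice.can("update", "Article"))  # True (via the editor role)
    print(alice.can("delete", "Article"))  # False (nobody grants it)
```

Caching, as in the fourth bullet, would amount to memoizing `effective_permissions()` and invalidating it whenever grants change; that is left out here to keep the sketch small.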


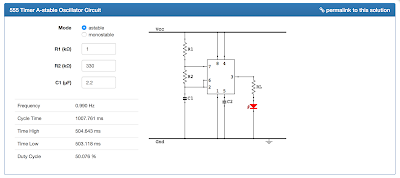

555 Timer simulator with HTML5 Canvas

The 555 timer chip has been around since the '70s, so does the world really need another website for calculating the circuit values?

No! But I made one anyway. It's really an excuse to play around with HTML5 canvas and demonstrate a Grunt and CoffeeScript toolchain.

See this running live at visual555.tardate.com, where you can find more info and links to projects and source code on GitHub.
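
The post doesn't list the formulas the simulator uses. As a sketch, these are the standard textbook approximations for a 555 in astable mode, with R1 from Vcc to the discharge pin, R2 from discharge to the threshold/trigger pins, and timing capacitor C: the capacitor charges through R1 + R2 and discharges through R2 alone, and each swing between the 1/3 Vcc and 2/3 Vcc thresholds takes ln 2 × RC. The `astable_555` name is hypothetical, and this is not code from visual555.tardate.com.

```python
import math


def astable_555(r1_ohms: float, r2_ohms: float, c_farads: float) -> dict:
    """Ideal 555 astable timing (comparator thresholds at 1/3 and 2/3 Vcc)."""
    if min(r1_ohms, r2_ohms, c_farads) <= 0:
        raise ValueError("component values must be positive")
    ln2 = math.log(2)
    t_high = ln2 * (r1_ohms + r2_ohms) * c_farads  # charging through R1 + R2
    t_low = ln2 * r2_ohms * c_farads               # discharging through R2 only
    period = t_high + t_low
    return {
        "t_high_s": t_high,
        "t_low_s": t_low,
        "frequency_hz": 1 / period,
        "duty_cycle": t_high / period,
    }


if __name__ == "__main__":
    # Example values: R1 = 1 kOhm, R2 = 10 kOhm, C = 10 uF
    result = astable_555(1_000, 10_000, 10e-6)
    print(f"{result['frequency_hz']:.2f} Hz, duty {result['duty_cycle']:.1%}")
    # prints: 6.87 Hz, duty 52.4%
```

Because the discharge path excludes R1, the duty cycle of this basic circuit always stays above 50%.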

(blogarhythm ~ Time's Up / Living Colour)


Learning Devise for Rails

(blogarhythm ~ Points of Authority / Linkin Park)

I recently got my hands on a review copy of Learning Devise for Rails from Packt and was quite interested to see if it was worth a recommendation (tl;dr: yes).

A book like this has to be current. Happily, this edition covers Rails 4 and Devise 3, and the code examples worked fine for me with the latest point releases.

The book is structured as a primer and tutorial, perfect for those who are new to devise, and requires only basic familiarity with Rails. Tutorials are best when they go beyond the standard trivial examples, and the book does well on this score. It covers a range of topics that quickly become relevant when actually trying to use devise in real life. Beyond the basic steps needed to add devise to a Rails project, it demonstrates:

- customizing devise views

- using external authentication providers with Omniauth

- using NoSQL storage (MongoDB) instead of ActiveRecord (SQLite)

- integrating access control with CanCan

- testing with Test::Unit and RSpec

I particularly appreciate the fact that the chapter on testing is even there in the first place! These days, "how do I test it?" should really be one of the first questions we ask when learning something new.

The topics are clearly demarcated, so after the first run-through the book also works quite well as a cookbook. It does, however, suffer from a few cryptic back-references in the narrative, so if you dive in cookbook-style you may find yourself flipping back to previous sections to connect the dots. A little extra effort on the editing front could have fixed this (along with some of the phrasing, which is a bit stilted in parts).

Authentication has always been a critical part of Rails development, but since Rails 3 in particular, it is fair to say that devise has emerged as the mature, conventional solution (for now!). So I can see this book being the ideal resource for developers just starting to get serious about building Rails applications.

Learning Devise for Rails would be a good choice if you are looking for that shot of knowledge to fast-track the most common authentication requirements, but also want to learn devise in a little more depth than a copy/paste from the README and wiki will get you! It gives you enough of a foundation to move on to more advanced topics not covered in the book (such as developing custom strategies or understanding the devise and Warden internals).
