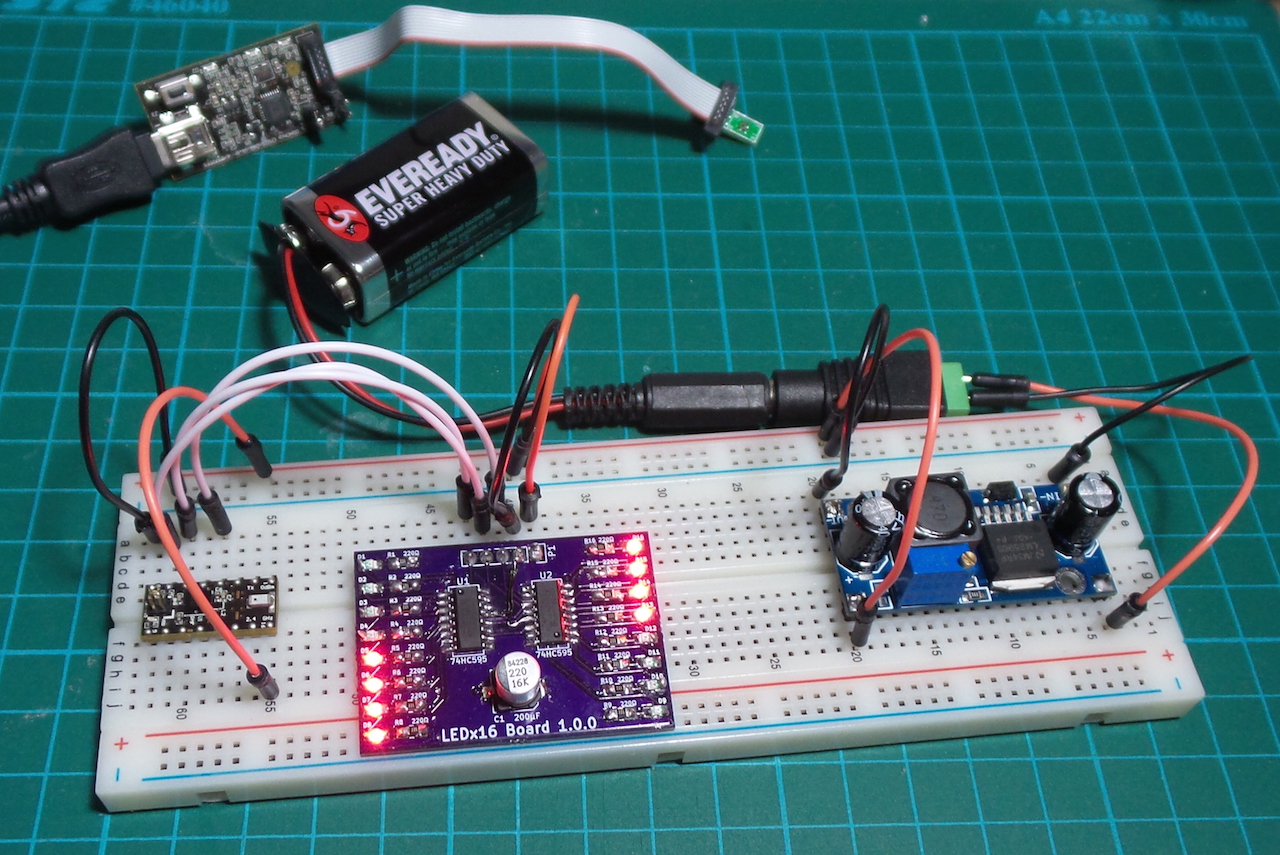

LEAP#216 OSHChip driving SPI LED module

How easy is SPI with the OSHChip? I thought I'd find out by first controlling a module that has a very basic SPI-ish slave interface.

I'm using the LEDx16Module that I designed in the "KiCad like a Pro" course from Tech Explorations. It has two 74HC595 shift registers that can be driven with SPI to control 16 onboard LEDs.
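When two 74HC595s share one SPI bus, the thing to get right is byte ordering. The sketch below is a pure software model, not OSHChip or Nordic SDK driver code. It assumes the two chips are daisy-chained (the first chip's QH' output feeding the second chip's SER input) with LEDs 8 to 15 on the far chip. The actual LEDx16Module wiring may differ, and the names `hc595_chain`, `leds_write` and the other helpers are hypothetical. The model shows that the first byte clocked out ends up in the far register, so a 16-bit pattern is sent high byte first and only reaches the LEDs when the latch (RCLK) line is pulsed.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical software model of two daisy-chained 74HC595s, used only to
 * reason about byte order. "near" is the chip whose SER pin is driven by
 * MOSI; "far" is fed from the near chip's QH' output (assumed wiring).
 * Bit 0 of each register models QA, bit 7 models QH. */
typedef struct {
    uint8_t near_shift, far_shift; /* internal shift registers */
    uint8_t near_out, far_out;     /* output latches that drive the LEDs */
} hc595_chain;

/* One SRCLK rising edge (one SPI clock): every stage moves one place
 * toward QH, and the near chip's old QH bit carries into the far chip. */
static void chain_clock_bit(hc595_chain *c, unsigned bit) {
    assert(bit <= 1u);
    uint8_t carry = (uint8_t)((c->near_shift >> 7) & 1u);
    c->far_shift = (uint8_t)((c->far_shift << 1) | carry);
    c->near_shift = (uint8_t)((c->near_shift << 1) | bit);
}

/* Clock out one byte MSB first, as a typical SPI peripheral does. */
static void chain_spi_byte(hc595_chain *c, uint8_t byte) {
    for (int i = 7; i >= 0; --i) {
        chain_clock_bit(c, (byte >> i) & 1u);
    }
}

/* RCLK rising edge: copy both shift registers to the output latches. */
static void chain_latch(hc595_chain *c) {
    c->near_out = c->near_shift;
    c->far_out = c->far_shift;
}

/* Hypothetical helper: show a 16-bit LED pattern. The byte sent first is
 * pushed through to the far chip, so the high byte goes out first and the
 * low byte (assumed to map to the near chip's LEDs) goes out last. */
static void leds_write(hc595_chain *c, uint16_t pattern) {
    chain_spi_byte(c, (uint8_t)(pattern >> 8));
    chain_spi_byte(c, (uint8_t)(pattern & 0xFFu));
    chain_latch(c);
}
```

For example, after `hc595_chain c = {0}; leds_write(&c, 0xA5F0);` the model's `far_out` holds `0xA5` and `near_out` holds `0xF0`. Bytes clocked in without a latch pulse do not change the outputs, which is why the latch step comes last.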

As always, all notes, schematics and code are in the Little Electronics & Arduino Projects repo on GitHub.


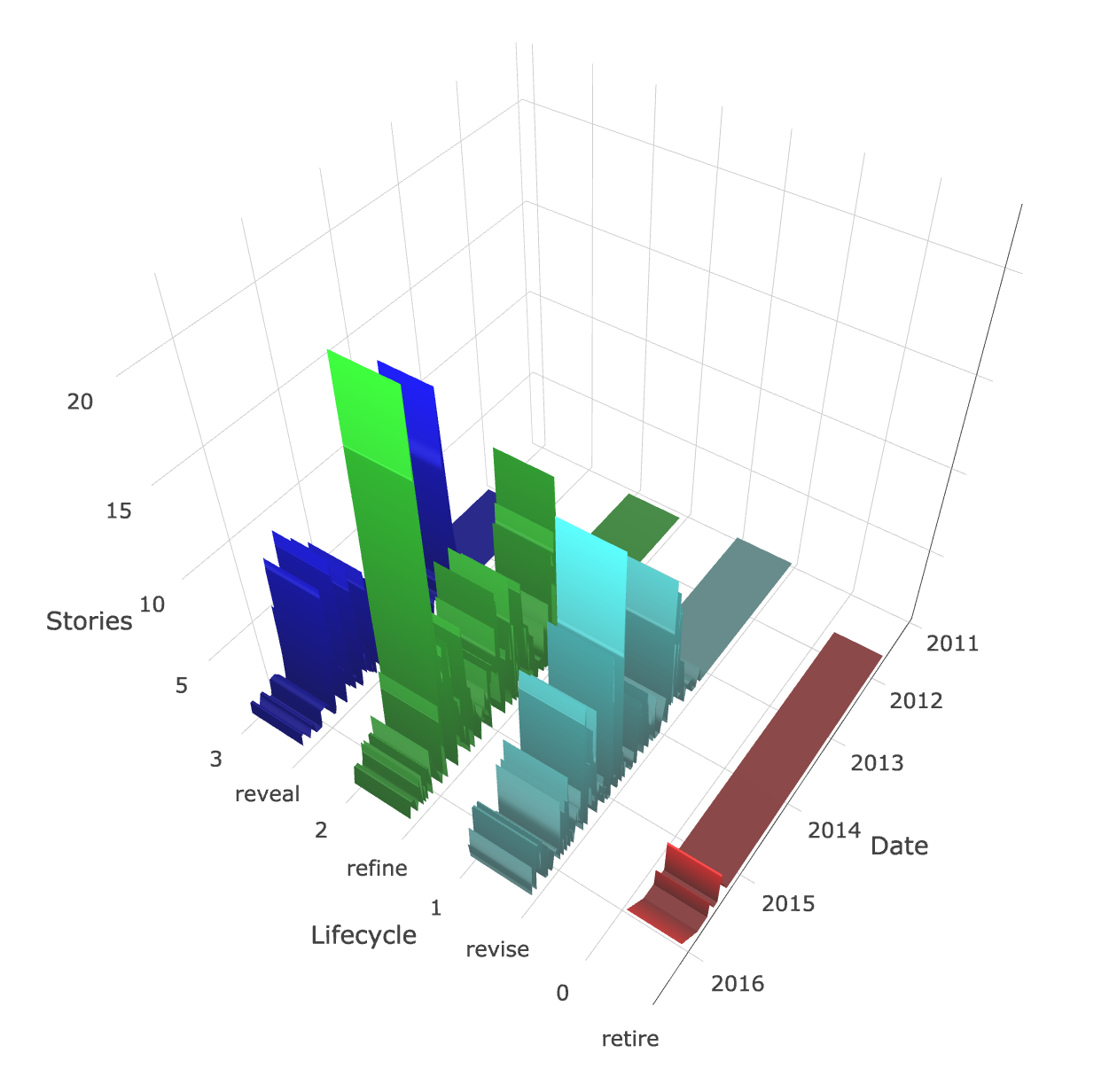

Feature Lifecycle Analysis with PivotalTracker

Can you trust your agile planning process to deliver the best result over time?

In an ideal world with a well-balanced and high-performing team, theory says it should all be dandy. But what happens when the real world sticks its nose in and you need to deal with varying degrees of dysfunction?

Feature Lifecycle Analysis is a technique I've been experimenting with for a few years. The idea is to visualise how well we are doing as a team at balancing our efforts between new feature development, refinement, maintenance and ultimately feature deprecation.

If you'd like to find out more and run an analysis on your own projects, try out the Feature Lifecycle Analysis site. It includes an analysis of two real software development projects, and also a tool for analysing your own PivotalTracker projects.

As always, all notes, schematics and code are on GitHub.


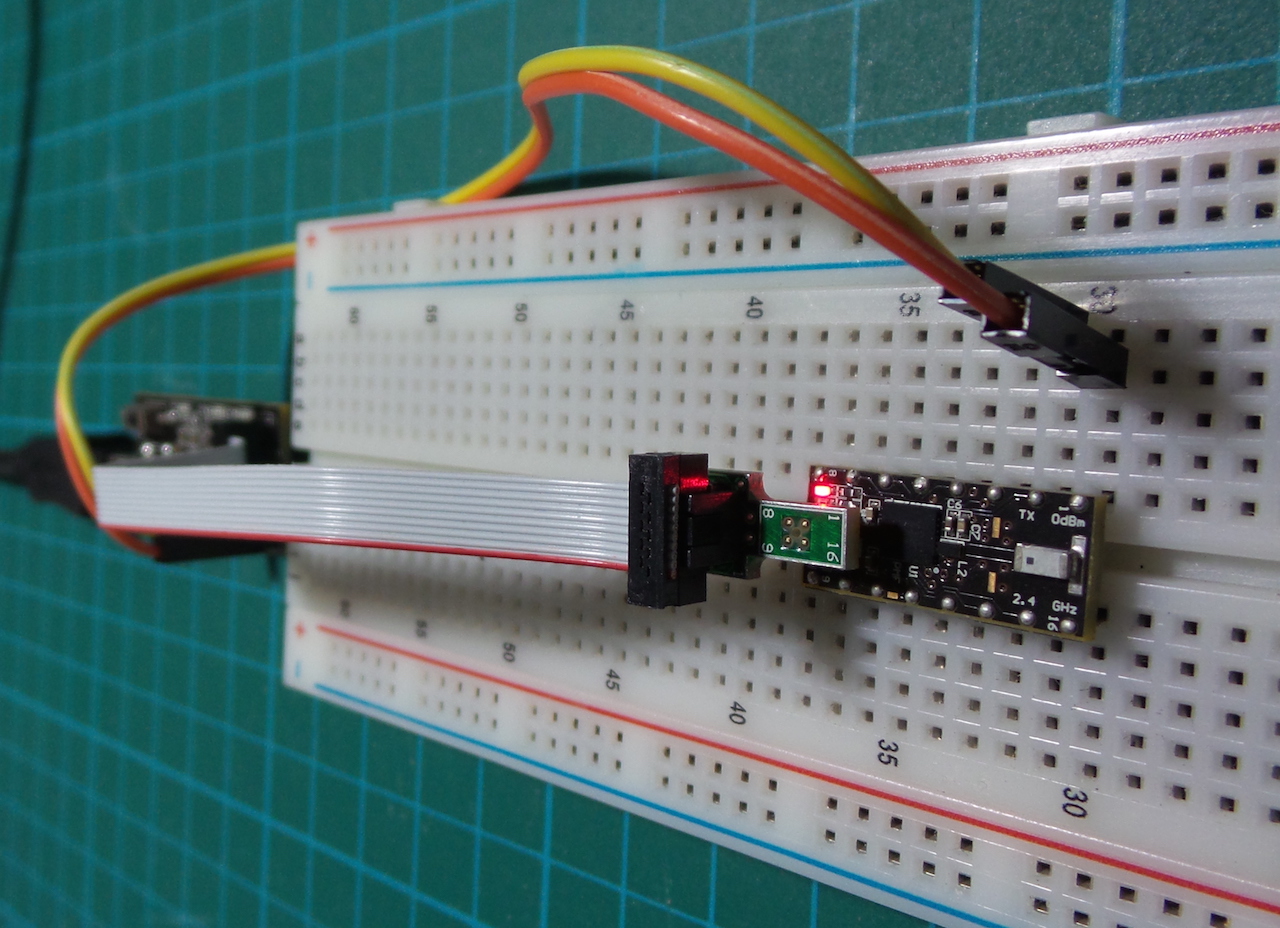

LEAP#215 OSHChip yotta toolchain

After bruising myself on the raw gcc toolchain (although I did get a program running), I think I want my toolchain to do more of the hard work for me!

So next I tried yotta, the software module system used by mbed OS. Building a simple program using the Official Yotta target for OSHChip and gcc on MacOSX proved quite straightforward.

As always, all notes, schematics and code are in the Little Electronics & Arduino Projects repo on GitHub.


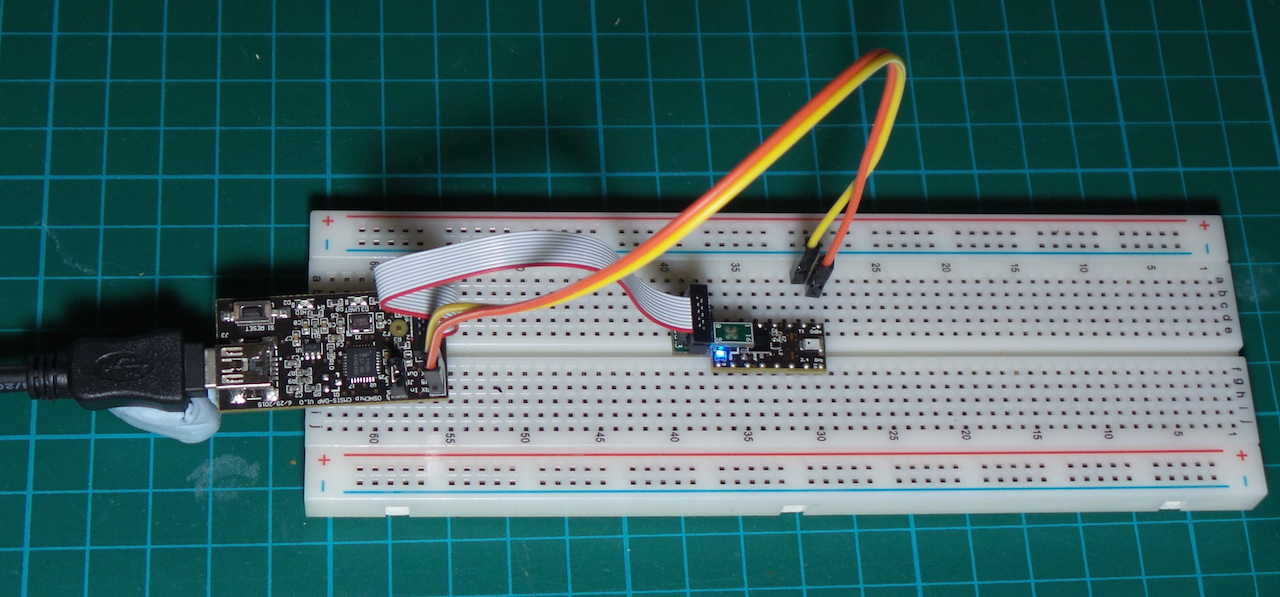

LEAP#214 OSHChip gcc toolchain

Can I build a program for the OSHChip using the gcc toolchain and Nordic Semi SDK on MacOSX?

Yes..ish!

Here are my notes and scripts for compiling and deploying a simple program, but a few rough edges remain. There are probably easier ways to do this (like using the Official Yotta target for OSHChip with gcc), but I was curious to see how far I could get with just gcc and the Nordic Semi SDK.

As always, all notes, schematics and code are in the Little Electronics & Arduino Projects repo on GitHub.
